Lesson C: Dynamic localization

Main authors: Simon Rohou, Maël Godard (Matlab binding)

We now propose to extend the previous lesson to the dynamic case, i.e. with a mobile robot evolving in the middle of a field of indistinguishable landmarks.

Formalism

The state is now with a fourth dimension for depicting the velocity of the robot. We are now addressing the following state estimation problem, with \(\mathbf{x}\in\mathbb{R}^4\):

The asynchronous measurements \(\mathbf{y}_i\in\mathbb{R}^2\) are obtained for each time instants \(t_i\in[t_0,t_f]\). In this lesson, because the times \(t_i\) are supposed to be exactly known, these constraints will be dealt with as restrictions on tubes. Note that in case of time uncertainties, \(t_i\in[t_i]\), we should have used a specific contractor for reliability purposes.

The evolution function \(\mathbf{f}\) depicts the evolution of the state with respect to some input vector \(\mathbf{u}\):

where \((x_1,x_2)^\intercal\) is the 2d position of the robot, \(x_3\) its heading and \(x_4\) its velocity.

The input \(\mathbf{u}(\cdot)\) is unknown, but we assume that we have continuous measurements for the headings \(x_3(\cdot)\) and the speeds \(x_4(\cdot)\), with some bounded uncertainties defined by intervals \([e_3]=[-0.02,0.02]\), \([e_4]=[-0.02,0.02]\).

Lastly, we do not have any knowledge about the initial position of the robot.

Decomposition

Exercise

C.1. In the first lesson, we wrote a decomposition of the static range and bearing problem. We extend this decomposition to deal with the dynamical case. This will make appear new intermediate variables. We propose the following decomposition:

external trajectory variables: \(\mathbf{x}(\cdot)\in[t_0,t_f]\to\mathbb{R}^4\)

intermediate trajectory variables: \(\mathbf{v}(\cdot)\in[t_0,t_f]\to\mathbb{R}^4\)

external static variables (for each observation): \(\mathbf{y}_i\in\mathbb{R}^2\), \(\mathbf{m}_i\in\mathbb{R}^2\)

intermediate static variables (for each observation): \(a_i\in\mathbb{R}\), \(\mathbf{d}_i\in\mathbb{R}^2\)

constraints:

Initialization

Before going into a set-membership state estimation, we set up a simulation environment to test different random situations.

Exercise

(reuse your code from the previous lesson)

C.2. Using the following code, generate a set of randomly positionned landmarks:

srand() # initialize the random seed (from C++)

N = 50 # number of landmarks

X = IntervalVector([[-40,40],[-40,40]]) # landmarks distribution zone

M = [] # creating the landmarks

for i in range (0,N):

M.append(IntervalVector(X.rand()).inflate(0.2))

fig = Figure2D("Robot simulation", GraphicOutput.VIBES)

fig.set_axes(

axis(0, X[0].inflate(10), "x_1"),

axis(1, X[1].inflate(10), "x_2")

).auto_scale()

for mi in M: # displaying the landmarks

fig.draw_box(mi, [Color.dark_green(),Color.green()])

srand(time(NULL)); // initialize the random seed (from C++)

int N = 50; // number of landmarks

IntervalVector X({{-40,40},{-40,40}}); // landmarks distribution zone

std::vector<IntervalVector> M; // creating the landmarks

for(int i = 0 ; i < N ; i++)

M.push_back(IntervalVector(X.rand()).inflate(0.2));

Figure2D fig("Robot simulation", GraphicOutput::VIBES);

fig.set_axes(

axis(0, X[0].inflate(10), "x_1"),

axis(1, X[1].inflate(10), "x_2")

).auto_scale();

for(const auto& mi : M) // displaying the landmarks

fig.draw_box(mi, {Color::dark_green(),Color::green()});

srand(); % initialize the random seed (from C++)

N = 50; % number of landmarks

X = IntervalVector({{-40,40},{-40,40}}); % landmarks distribution zone

M={}; % creating the landmarks

for i = 1:N

M{i} = IntervalVector(X.rand()).inflate(0.2);

end

fig = Figure2D("Robot simulation", GraphicOutput().VIBES);

fig.set_axes(axis(1, X(1).inflate(10),"x_1"),axis(2, X(2).inflate(10),"x_2")).auto_scale();

for i = 1:numel(M) % displaying the landmarks

fig.draw_box(M{i}, StyleProperties({Color().dark_green(),Color().green()}))

end

C.3. Now, we simulate a mobile robot moving in the middle of the field of landmarks. The trajectory is defined by a set of random waypoints. The class RobotSimulator is used to simplify the simulation:

wpts = [] # creating random waypoints for simulating the robot trajectory

X = IntervalVector([[-35,35],[-35,35]]) # robot evolution zone

for i in range (0,5): # 5 waypoints

wpts.append(X.rand())

s = RobotSimulator()

s.w_max = 0.2 # maximum turning speed

u = SampledVectorTraj() # the simulator will return the inputs (not used)

x_truth = s.simulate(

[0,0,0,0], # initial state (will be supposed unknown)

1e-2, # simulation time step

wpts, u)

std::list<Vector> wpts; // creating random waypoints for simulating the robot trajectory

X = IntervalVector({{-35,35},{-35,35}}); // robot evolution zone

for(int i = 0 ; i < 5 ; i++) // 5 waypoints

wpts.push_back(X.rand());

RobotSimulator s;

s.w_max = 0.2; // maximum turning speed

auto u = SampledTraj<Vector>(); // the simulator will return the inputs (not used)

auto x_truth = s.simulate(

{0,0,0,0}, // initial state (will be supposed unknown)

1e-2, // simulation time step

wpts, u);

wpts={}; % creating random waypoints for simulating the robot trajectory

X = IntervalVector({{-35,35},{-35,35}}); % robot evolution zone

for i = 1:5 % 5 waypoints

wpts{i} = X.rand();

end

s = RobotSimulator();

s.w_max = 0.2; % maximum turning speed

u = SampledVectorTraj(); % the simulator will return the inputs (not used)

x_truth = s.simulate(Vector({0,0,0,0}), 0.01, wpts, u); % initial state (will be supposed unknown) and simulation time step

C.4. We update the observation function g of the previous lessons for the dynamical case. To ease the code, the returned observation vector will contain the observation time \(t_i\).

def g(t,x,M):

obs = [] # several landmarks can be seen at some ti

scope_range = Interval(0,10)

scope_angle = Interval(-PI/4,PI/4)

for mi in M:

r = sqrt(sqr(mi[0]-x[0]) + sqr(mi[1]-x[1]))

a = atan2(mi[1]-x[1], mi[0]-x[0]) - x[2]

# If the landmark is seen by the robot:

if scope_range.is_superset(r) and scope_angle.is_superset(a):

obs.append(IntervalVector([t,r,a]))

return obs

std::vector<IntervalVector> g(double t, const Vector& x, const std::vector<IntervalVector>& M)

{

std::vector<IntervalVector> obs; // several landmarks can be seen at some ti

Interval scope_range(0,10);

Interval scope_angle(-PI/4,PI/4);

for(const auto& mi : M)

{

Interval r = sqrt(sqr(mi[0]-x[0]) + sqr(mi[1]-x[1]));

Interval a = atan2(mi[1]-x[1], mi[0]-x[0]) - x[2];

// If the landmark is seen by the robot:

if(scope_range.is_superset(r) && scope_angle.is_superset(a))

obs.push_back(IntervalVector({t,r,a}));

}

return obs;

}

function obs = g(t,x,M)

obs = {}; % several landmarks can be seen at some ti

scope_range = py.codac4matlab.Interval(0,10);

scope_angle = py.codac4matlab.Interval(-py.codac4matlab.PI/4,py.codac4matlab.PI/4);

for i = 1:numel(M)

mi = M{i};

r = py.codac4matlab.sqrt(py.codac4matlab.sqr(mi(1)-x(1)) + py.codac4matlab.sqr(mi(2)-x(2)));

a = py.codac4matlab.atan2(mi(2)-x(2),mi(1)-x(1)) - x(3);

% If the landmark is seen by the robot:

if scope_range.is_superset(r) && scope_angle.is_superset(a)

obs{end+1} =py.codac4matlab.cart_prod(t,r,a);

end

end

end

C.5. Finally, we run the simulation for generating and drawing the observation vectors. obs contains all the observation vectors.

prev_t = 0.

time_between_obs = 3.

obs = []

t = x_truth.tdomain().lb()

while t < x_truth.tdomain().ub():

if t-prev_t > time_between_obs:

obs_ti = g(t,x_truth(t),M) # computing the observation vector

for yi in obs_ti:

prev_t = yi[0].mid()

fig.draw_pie(x_truth(t).subvector(0,1), yi[1]|0., yi[2]+x_truth(t)[2], Color.light_gray())

fig.draw_pie(x_truth(t).subvector(0,1), yi[1], yi[2]+x_truth(t)[2], Color.red())

obs.extend(obs_ti)

t += 1e-2

double prev_t = 0.;

double time_between_obs = 3.;

std::vector<IntervalVector> obs;

for(double t = x_truth.tdomain().lb() ; t < x_truth.tdomain().ub() ; t += 1e-2)

{

if(t-prev_t > time_between_obs)

{

auto obs_ti = g(t,x_truth(t),M); // computing the observation vector

for(const auto& yi : obs_ti)

{

prev_t = yi[0].mid();

fig.draw_pie(x_truth(t).subvector(0,1), yi[1]|0., yi[2]+x_truth(t)[2], Color::light_gray());

fig.draw_pie(x_truth(t).subvector(0,1), yi[1], yi[2]+x_truth(t)[2], Color::red());

}

obs.insert(obs.end(),obs_ti.begin(),obs_ti.end());

}

}

prev_t = 0.;

time_between_obs = 3.;

obs = {};

t = double(x_truth.tdomain().lb());

tend = x_truth.tdomain().ub();

while t < tend

if t-prev_t > time_between_obs

x_truth_t = x_truth(t);

obs_ti = g(t,x_truth_t,M); % computing the observation vector

for i = 1:numel(obs_ti)

yi = obs_ti{i};

prev_t = yi(1).mid();

fig.draw_pie(x_truth_t.subvector(1,2), yi(2).union(0), x_truth_t(3)+yi(3), Color().light_gray());

fig.draw_pie(x_truth_t.subvector(1,2), yi(2), x_truth_t(3)+yi(3), Color().red());

obs{end+1} = yi;

end

end

t = t + 0.01;

end

State estimation with constraint programming

Exercise

C.6. Domains. We first create the tube \([\mathbf{x}](\cdot)\) that will contain the set of feasible state trajectories. In Codac, a tube is built as a list of slices, the temporal sampling of which is defined as a temporal domain (TDomain) that must be created beforehand. Several tubes can stand on a same TDomain. Then, the tube x is created according to the problem: we do not know its first two components for the positions, but the headings and velocities are known with some bounded errors. We define a function h for this purpose of initialization.

_x = VectorVar(4)

h = AnalyticFunction([_x],

[

# Positions are not known..

Interval(-oo,oo),

Interval(-oo,oo),

# But headings (x3) and velocities (x4) are bounded..

_x[2] + 2e-2*Interval(-1,1),

_x[3] + 2e-2*Interval(-1,1)

])

tdomain = create_tdomain(x_truth.tdomain(), 5e-2, True)

# The tube x is created from the interval evaluation of the actual trajectory

x = h.tube_eval(SlicedTube(tdomain,x_truth))

# The tube v is created as a four-dimensional tube of infinite values

v = SlicedTube(tdomain, IntervalVector([[-oo,oo],[-oo,oo],[-oo,oo],[-oo,oo]]))

VectorVar _x(4);

AnalyticFunction h({_x},

{

// Positions are not known..

Interval(-oo,oo),

Interval(-oo,oo),

// But headings (x3) and velocities (x4) are bounded..

_x[2] + 2e-2*Interval(-1,1),

_x[3] + 2e-2*Interval(-1,1)

});

auto tdomain = create_tdomain(x_truth.tdomain(), 5e-2, true);

// The tube x is created from the interval evaluation of the actual trajectory

SlicedTube x = h.tube_eval(SlicedTube(tdomain,x_truth));

// The tube v is created as a four-dimensional tube of infinite values

SlicedTube v(tdomain, IntervalVector({{-oo,oo},{-oo,oo},{-oo,oo},{-oo,oo}}));

x_ = VectorVar(4);

% Positions are not known, but headings (x3) and velocities (x4) are bounded..

h = AnalyticFunction({x_},vec(Interval(-oo,oo),Interval(-oo,oo), x_(3) + 0.02*Interval(-1,1), x_(4) + 0.02*Interval(-1,1)));

tdomain = create_tdomain(x_truth.tdomain(),0.05, true);

% The tube x is created from the interval evaluation of the actual trajectory

x = h.tube_eval(SlicedTube(tdomain,x_truth));

% The tube v is created as a four-dimensional tube of infinite values

v = SlicedTube(tdomain, IntervalVector(4));

In the above code, the intermediate variable \(\mathbf{v}(\cdot)\) has also its own domain v. It is initialized to \([-\infty,\infty]\) for each dimension.

C.7. Contractors. We re-use the contractors of the previous lesson: \(\mathcal{C}_+\), \(\mathcal{C}_-\), \(\mathcal{C}_{\mathrm{polar}}\), \(\mathcal{C}_{\mathbb{M}}\). It remains to create the contractors related to the tubes (blue constraints \((v)\) and \((vi)\)). One of them is already available in the catalog of contractors of the library: \(\mathcal{C}_{\mathrm{deriv}}\left([\mathbf{x}](\cdot),[\mathbf{v}](\cdot)\right)\) for the constraint \(\dot{\mathbf{x}}(\cdot)=\mathbf{v}(\cdot)\). The other \(\mathcal{C}_\mathbf{f}\) contractor can be defined as previously, according to the evolution function.

ctc_deriv = CtcDeriv()

_x, _v = VectorVar(4), VectorVar(4)

f = AnalyticFunction([_x,_v],

[

_v[0]-_x[3]*cos(_x[2]),

_v[1]-_x[3]*sin(_x[2])

])

ctc_f = CtcInverse(f, [0,0])

# + other contractors from previous lessons:

# ctc_plus, ctc_minus, ctc_polar, ctc_constell

CtcDeriv ctc_deriv;

VectorVar x_(4), v_(4);

AnalyticFunction f({x_,v_},

{

v_[0]-x_[3]*cos(x_[2]),

v_[1]-x_[3]*sin(x_[2])

});

CtcInverse<

IntervalVector, // f output type

IntervalVector,IntervalVector // f inputs (i.e. contractor inputs)

> ctc_f(f, {0,0});

// + other contractors from previous lessons:

// ctc_plus, ctc_minus, ctc_polar, ctc_constell

ctc_deriv = CtcDeriv();

[x_f,v_f] = deal(VectorVar(4),VectorVar(4));

f = AnalyticFunction({x_f,v_f},vec(v_f(1)-x_f(4)*cos(x_f(3)), v_f(2)-x_f(4)*sin(x_f(3))));

ctc_f = CtcInverse(f, Vector([0,0]));

% + other contractors from previous lessons:

% ctc_plus, ctc_minus, ctc_polar, ctc_constell

C.8. Fixpoint resolution. Finally, the propagation loops need to be updated to incorporate the dynamic constraints.

Note that the contractors \(\mathcal{C}_\mathbf{f}\) and \(\mathcal{C}_{\mathrm{deriv}}\) apply to the whole tubes \([\mathbf{x}](\cdot)\) and \([\mathbf{v}](\cdot)\). Furthermore, the class CtcInverse can contract tubes using the .contract_tube(..) method, exactly as we would do for boxes. Finally, a restriction on a tube (i.e. setting a value to a slice) can be done using the .set(y,t) method, for setting the interval vector value y at time t.

def ctc_one_obs(xi,yi,mi,ai,si):

mi,xi,si = ctc_minus.contract(mi,xi,si)

xi[2],yi[2],ai = ctc_plus.contract(xi[2],yi[2],ai)

si[0],si[1],yi[1],ai = ctc_polar.contract(si[0],si[1],yi[1],ai)

mi = ctc_constell.contract(mi)

return xi,yi,mi,ai,si

def ctc_all_obs(x):

global v

for yi in obs:

xi = x(yi[0]) # evalution of the tube at time ti=yi[0]

ai = Interval()

si = IntervalVector(2)

mi = IntervalVector(2)

xi,yi,mi,ai,si = fixpoint(ctc_one_obs, xi,yi,mi,ai,si)

x.set(xi,yi[0]) # restriction on the tube x at time ti=yi[0]

x,v = ctc_f.contract_tube(x,v)

ctc_deriv.contract(x,v)

return x

x = fixpoint(ctc_all_obs, x)

fixpoint([&]() {

for(auto& yi : obs)

{

IntervalVector xi = x(yi[0]); // evalution of the tube at time ti=yi[0]

Interval ai;

IntervalVector si(2);

IntervalVector mi(2); // the identity (position) of the landmark is not known

fixpoint([&]() {

ctc_minus.contract(mi,xi,si);

ctc_plus.contract(xi[2],yi[2],ai);

ctc_polar.contract(si[0],si[1],yi[1],ai);

ctc_constell.contract(mi);

}, xi,yi,mi);

x.set(xi,yi[0]); // restriction on the tube x at time ti=yi[0]

}

ctc_f.contract_tube(x,v);

ctc_deriv.contract(x,v);

}, x);

function [xi,yi,mi,ai,si] = ctc_one_obs(xi,yi,mi,ai,si,ctc_plus,ctc_polar,ctc_minus,ctc_constell)

res_ctc_minus = ctc_minus.contract(py.codac4matlab.cart_prod(mi,xi,si)); % The result is a 8D IntervalVector

[mi,xi,si] = deal(res_ctc_minus.subvector(1,2),res_ctc_minus.subvector(3,6),res_ctc_minus.subvector(7,8));

res_ctc_plus = ctc_plus.contract(py.codac4matlab.cart_prod(xi(3), yi(3), ai)); % The result is a 3D IntervalVector

xi.setitem(3,res_ctc_plus(1));

yi.setitem(3,res_ctc_plus(2));

ai = res_ctc_plus(3);

res_ctc_polar = ctc_polar.contract(py.codac4matlab.cart_prod(si(1),si(2),yi(2),ai)); % The result is a 4D IntervalVector

si.setitem(1,res_ctc_polar(1));

si.setitem(2,res_ctc_polar(2));

yi.setitem(2,res_ctc_polar(3));

ai = res_ctc_polar(4);

mi = ctc_constell.contract(mi);

end

function [x,y,m,a,s] = fixpoint_ctc_one_obs(x,y,m,a,s,ctc_plus,ctc_polar,ctc_minus,ctc_constell)

vol = -1.;

prev_vol = -2.;

while vol ~= prev_vol

prev_vol = vol;

[x,y,m,a,s] = ctc_one_obs(x,y,m,a,s,ctc_plus,ctc_polar,ctc_minus,ctc_constell);

vol = 0.;

[x_vol,y_vol,m_vol,a_vol,s_vol] = deal(x.volume(), y.volume(), m.volume(), a.volume(), s.volume());

if x_vol~=inf

vol = vol + x_vol;

end

if y_vol~=inf

vol = vol + y_vol;

end

if m_vol~=inf

vol = vol + m_vol;

end

if a_vol~=inf

vol = vol + a_vol;

end

if s_vol~=inf

vol = vol + s_vol;

end

if x.is_empty() || y.is_empty() || m.is_empty() || a.is_empty() || s.is_empty()

break

end

end

end

function [x,v] = ctc_all_obs(x,v,obs,ctc_plus,ctc_polar,ctc_minus,ctc_constell,ctc_f,ctc_deriv)

for i = 1:numel(obs)

yi = obs{i};

xi = x(yi(1));

ai = py.codac4matlab.Interval();

si = py.codac4matlab.IntervalVector(2);

mi = py.codac4matlab.IntervalVector(2);

[xi,yi,mi,ai,si] = fixpoint_ctc_one_obs(xi,yi,mi,ai,si,ctc_plus,ctc_polar,ctc_minus,ctc_constell);

x.set(xi,yi(1));

end

res_ctc_f = ctc_f.contract_tube(x,v);

x = res_ctc_f{1};

v = res_ctc_f{2};

ctc_deriv.contract(x,v);

end

function x = fixpoint_ctc_all_obs(x,v,obs,ctc_plus,ctc_polar,ctc_minus,ctc_constell,ctc_f,ctc_deriv)

vol = -1.;

prev_vol = -2.;

while vol ~= prev_vol

prev_vol = vol;

[x,v] = ctc_all_obs(x,v,obs,ctc_plus,ctc_polar,ctc_minus,ctc_constell,ctc_f,ctc_deriv);

vol = x.volume();

if x.is_empty()

break

end

end

end

fixpoint_ctc_all_obs(x,v,obs,ctc_plus,ctc_polar,ctc_minus,ctc_constell,ctc_f,ctc_deriv);

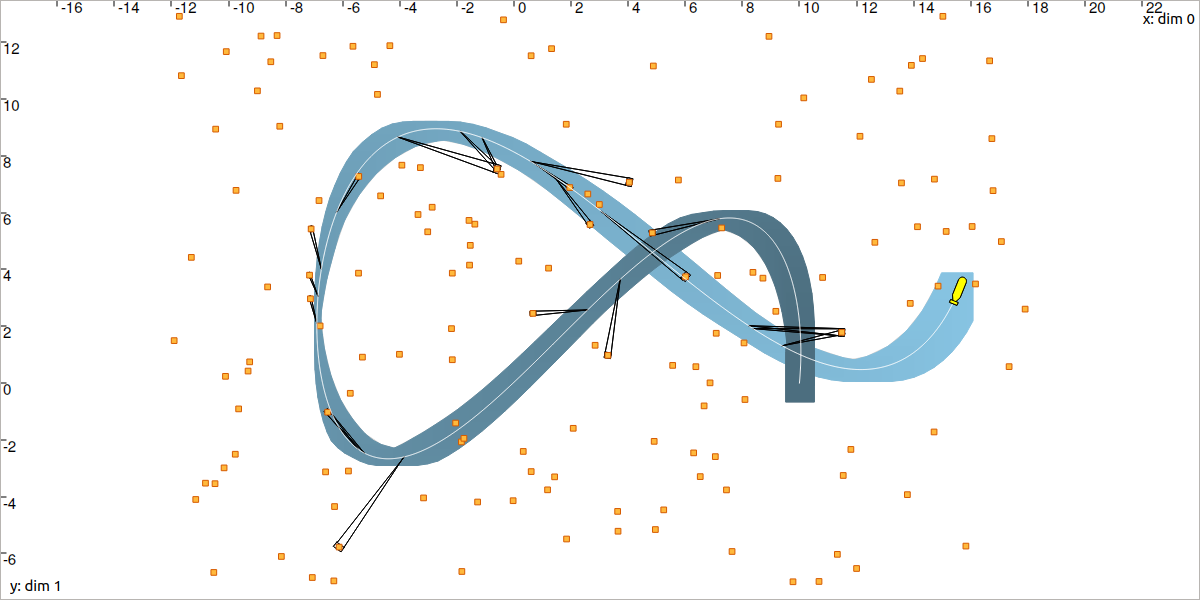

You should obtain a result similar to:

Localization by solving data association: the state trajectory \(\mathbf{x}(\cdot)\) (in white) has been estimated (in blue) together with the identification of the perceived landmarks.

Note that to obtain this exact result it is possible to manually set the random seed to 287.

srand(287)srand(287);srand(int32(287));

Why is this problem of localization and data association difficult?

We do not know the initial condition of the system. Contrary to other approaches, this solver made of contractors does not require some initial vector \(\mathbf{x}_0\) to start the estimation. Information is taken into account from anytime in \([t_0,t_f]\).

The constraints are heterogeneous: some of them are said continuous (they act on continuous domains of values, for instance intervals). Other are discrete (for instance, the identity of landmarks, estimated among a discrete set of \(n\) possible values). And finally, some constraints come from differential equations (for instance for depicting the robot evolution). In this solver, we show that any kind of constraint can be combined, without going into a complex resolution algorithm.

We do not have to linearize, and thus there is no approximation made here. This means that the equations are directly set in the solver, without transformation. Furthermore, the results are reliable: we can guarantee that the actual trajectory is inside the tube \([\mathbf{x}](\cdot)\).